4 Mistakes You Want To Avoid When Writing Tests As A Software Engineer

Testing best practices and bad practices? It's all about understanding when your tests should fail.

Testing is essential in software development.

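Even if you use a strongly typed language, you need tests. Even if your SQL database has a schema, you need tests. Even if you use GraphQL, you need tests. Types and schemas catch some classes of errors, but they cannot tell you whether your code does what it is supposed to do.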

Automated tests are a great tool for improving code quality and reducing the number of bugs.

If your code is hard to test, take it as a signal that it may not be well written. If you can write simple unit tests for your functions, your code is decoupled enough to be tested in isolation. On the other hand, if you need to mock five different services to test a single function, I suggest you revisit your implementation.

Tests are a great measure of the quality of a codebase.

When choosing a library for one of my projects, I don’t consider GitHub stars a quality indicator. I prefer looking at the tests and the release frequency. The same principle applies when you join a new company and look at the product's codebase.

When it comes to tests, there are best practices and bad practices.

In this post, I explain four bad practices I’ve seen in open-source code and at companies I have worked with, both as an employee and as a consultant. Hopefully, this will help you avoid the same mistakes.

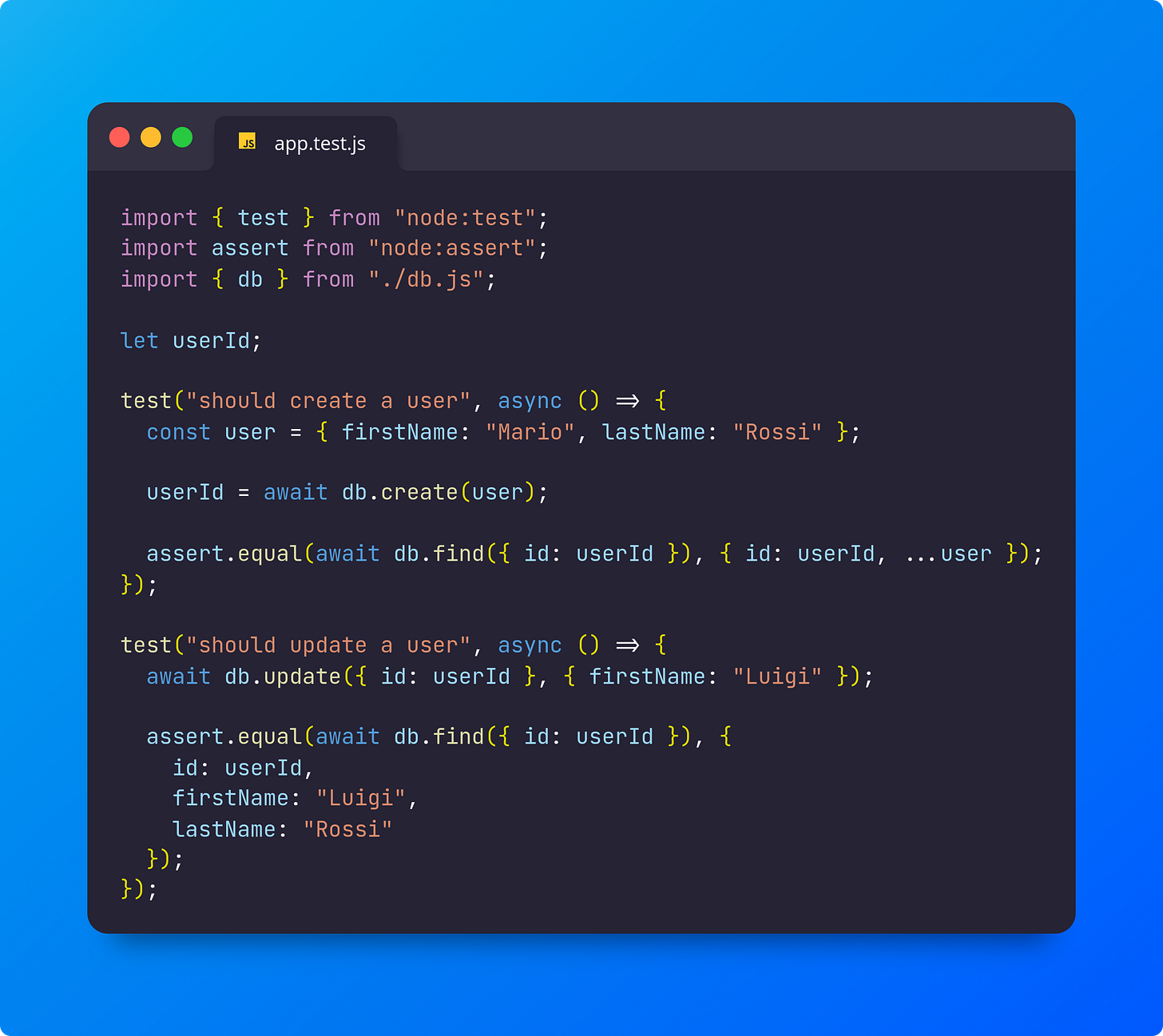

1. Tests Depending On Each Other

The image above shows two tests. Both depend on the variable userId: the first sets it, and the second expects it to be populated. This means the second test will fail if it runs without the first one.

When you write tests, always assume they may run under the following conditions:

Alone.

In random order.

In parallel.
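To make these conditions concrete, here is a minimal Python sketch using the standard library's unittest module. InMemoryUserRepo, CoupledUserTests, IndependentUserTests, run and run_shuffled are hypothetical names chosen for illustration, not the code from the image. The coupled suite mirrors the userId problem: it passes in its default alphabetical order but fails when test_get_user runs alone. The independent suite creates its own data in setUp, so it passes alone and in any order.

```python
import random
import unittest
import uuid


class InMemoryUserRepo:
    """Hypothetical in-memory stand-in for a real user store."""

    def __init__(self):
        self._users = {}

    def create(self, name):
        user_id = str(uuid.uuid4())
        self._users[user_id] = {"id": user_id, "name": name}
        return user_id

    def get(self, user_id):
        return self._users.get(user_id)


class CoupledUserTests(unittest.TestCase):
    """Anti-pattern: the second test relies on state left by the first."""

    repo = InMemoryUserRepo()
    user_id = None  # shared between tests through the class attribute

    def test_create_user(self):
        CoupledUserTests.user_id = self.repo.create("Ada")
        self.assertIsNotNone(CoupledUserTests.user_id)

    def test_get_user(self):
        # Fails when run alone: user_id is still None.
        self.assertIsNotNone(self.repo.get(CoupledUserTests.user_id))


class IndependentUserTests(unittest.TestCase):
    """Each test builds the data it needs from scratch."""

    def setUp(self):
        # unittest calls setUp before every test method, so nothing is shared.
        self.repo = InMemoryUserRepo()

    def test_create_user(self):
        user_id = self.repo.create("Ada")
        self.assertIsNotNone(self.repo.get(user_id))

    def test_get_user(self):
        user_id = self.repo.create("Grace")
        self.assertEqual(self.repo.get(user_id)["name"], "Grace")


def run(case_cls, names):
    """Run the named tests of case_cls in the given order; True if all pass."""
    suite = unittest.TestSuite(case_cls(name) for name in names)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()


def run_shuffled(case_cls, seed):
    """Run every test of case_cls in a seeded random order."""
    names = unittest.TestLoader().getTestCaseNames(case_cls)
    random.Random(seed).shuffle(names)
    return run(case_cls, names)
```

On a fresh run, run(CoupledUserTests, ["test_get_user"]) returns False, while run(IndependentUserTests, ["test_get_user"]) returns True, and run_shuffled(IndependentUserTests, seed) passes for any seed. Fresh state per test is also what makes parallel execution safe, although this sketch does not run tests in parallel.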

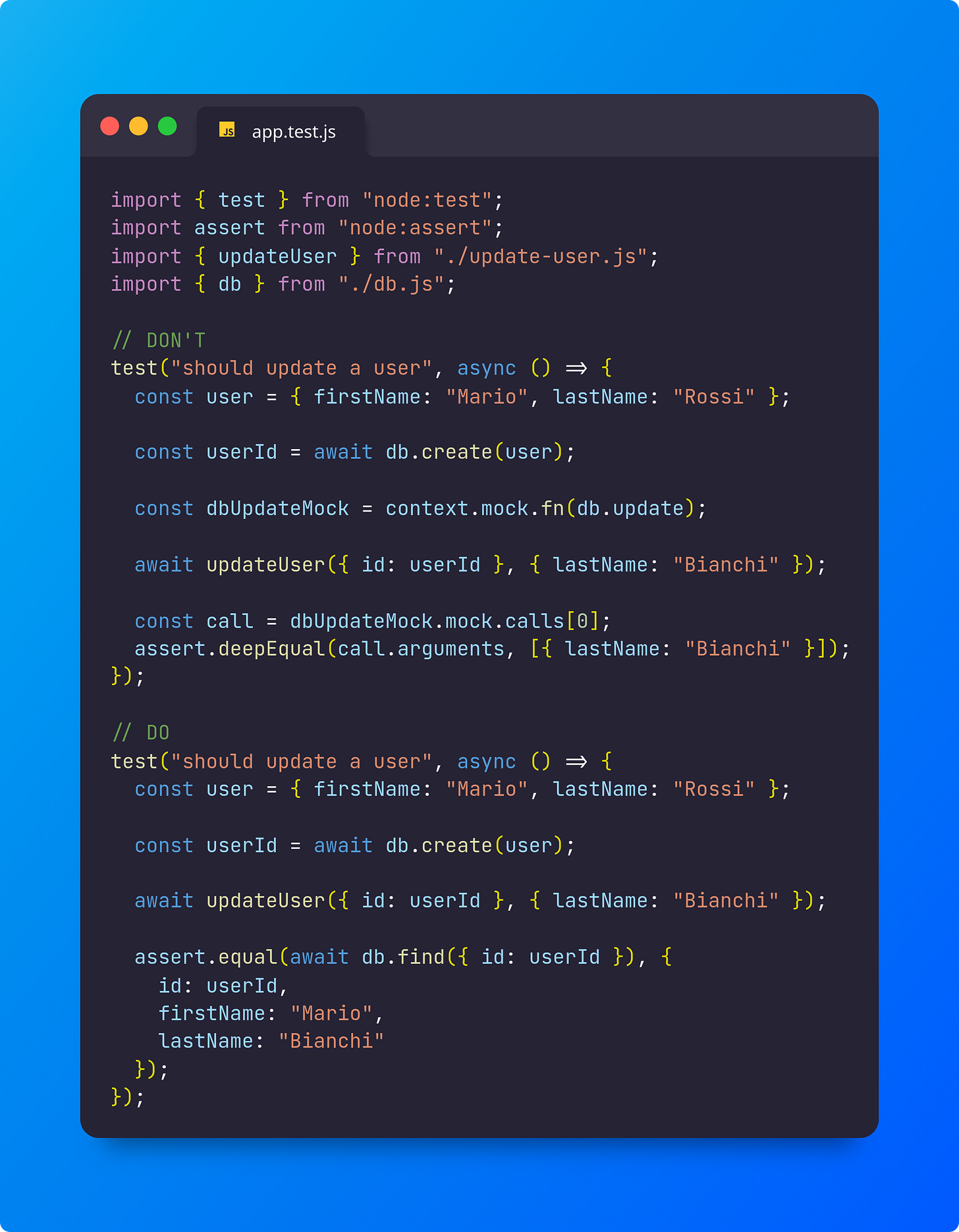

2. Tests Coupled To The Implementation

The image above shows two different implementations of the same test. Both verify the same function, updateUser.

In the first test, the verification is done by ensuring that the database's update method is called with the correct arguments.

In the second test, the verification is done by checking that the expected value is correctly written to the database.

While both tests look correct, there is a significant difference between the two.

The first test assumes that the updateUser function achieves its purpose in a particular way. It verifies that the function is implemented in a certain way, which requires knowing how it works internally.

The second test treats the same function as a black box. It doesn’t know how the function achieves its goal; it only checks that the result is the expected one.

In the first test, we test the implementation.

In the second test, we test the behavior.

Your tests should always test the behavior, never the implementation.

Testing the implementation means your tests might fail after a refactoring, even if the result stays the same. A test should not be coupled to the implementation of your code; it should treat the function under test as a black box and verify the output for a given input.
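The difference shows up as soon as the implementation changes. Below is a minimal Python sketch, assuming a hypothetical InMemoryDb and two hypothetical versions of update_user: update_user_v1 calls db.update, while update_user_v2 is a refactoring that writes one field at a time. implementation_test uses unittest.mock to check how the database is called, like the first test in the image; behavior_test checks what ends up stored, like the second. Each test returns True when it passes so the two can be compared side by side.

```python
from unittest import mock


class InMemoryDb:
    """Hypothetical in-memory database used only for illustration."""

    def __init__(self):
        self.rows = {}

    def insert(self, user_id, data):
        self.rows[user_id] = dict(data)

    def update(self, user_id, fields):
        self.rows[user_id].update(fields)

    def set_field(self, user_id, key, value):
        self.rows[user_id][key] = value

    def find(self, user_id):
        return self.rows.get(user_id)


def update_user_v1(db, user_id, fields):
    db.update(user_id, fields)


def update_user_v2(db, user_id, fields):
    # Refactored: one write per field. The stored result is identical.
    for key, value in fields.items():
        db.set_field(user_id, key, value)


def implementation_test(update_user):
    """Coupled to the implementation: checks how the db is called."""
    db = mock.Mock(spec=InMemoryDb)
    update_user(db, "u1", {"name": "Ada"})
    return db.update.call_args_list == [mock.call("u1", {"name": "Ada"})]


def behavior_test(update_user):
    """Black box: checks only what ends up in the db."""
    db = InMemoryDb()
    db.insert("u1", {"name": "Old", "email": "ada@example.com"})
    update_user(db, "u1", {"name": "Ada"})
    return db.find("u1") == {"name": "Ada", "email": "ada@example.com"}
```

Both versions leave the database in the same state, so behavior_test passes for both. implementation_test passes for update_user_v1 but fails for update_user_v2, even though nothing observable changed: that failure is a false alarm caused purely by the refactoring.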

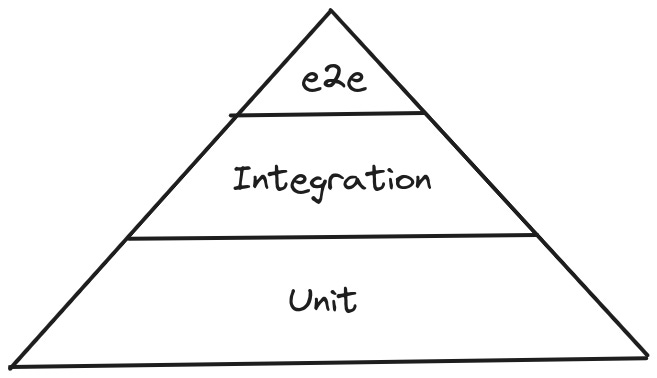

3. Unclear Test Types

The testing pyramid is well known.

You can find many different opinions about it online. I am not rigid about it, but being clear about which type of test we are writing helps.

End-to-end (e2e) tests are supposed to replicate the production environment as closely as possible.

Use the real database, make real API calls, test the UI, and authenticate your fake user. Avoid shortcuts that make them faster if those shortcuts take you further away from a real production setup.

Integration tests should test how modules interact with each other.

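If you mock all the external modules the code under test depends on, your tests cannot catch problems in how those modules interact, so they will not be reliable. Changing a module's public API should cause the integration test to fail.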
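Here is a minimal Python sketch of that failure mode, assuming two hypothetical modules: Pricing, whose total method recently gained a required currency parameter, and Checkout, which still calls it the old way. The fully mocked test keeps passing because a plain Mock accepts any arguments; the integration test wires the real modules together and fails, which is exactly what should happen.

```python
from unittest import mock


class Pricing:
    """Hypothetical module whose public API changed:
    total(items) became total(items, currency)."""

    def total(self, items, currency):
        amount = sum(price for _, price in items)
        return f"{amount:.2f} {currency}"


class Checkout:
    """Hypothetical module still written against the old Pricing API."""

    def __init__(self, pricing):
        self.pricing = pricing

    def summary(self, items):
        return "Total: " + self.pricing.total(items)  # currency is missing


ITEMS = [("pen", 1.0), ("notebook", 2.0)]


def fully_mocked_test():
    """Replaces Pricing entirely, so the outdated call goes unnoticed."""
    pricing = mock.Mock()
    pricing.total.return_value = "3.00 EUR"
    return Checkout(pricing).summary(ITEMS) == "Total: 3.00 EUR"


def integration_test():
    """Uses the real Pricing, so the API mismatch surfaces."""
    try:
        return Checkout(Pricing()).summary(ITEMS) == "Total: 3.00 EUR"
    except TypeError:
        # In a real test runner this error would simply fail the test.
        return False
```

If you do need to replace a collaborator, unittest.mock.create_autospec(Pricing, instance=True) builds a mock that checks call signatures, so it would also reject the outdated call. Using the real module is still the more faithful integration test.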

Unit tests should test atomic units.

Ideally, pure functions. No mocks, if possible.
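A pure function is the easiest case: the test needs no setup and no mocks, only inputs and expected outputs. The sketch below assumes a hypothetical apply_discount function that works in integer cents (to avoid floating-point rounding) and tests it with Python's unittest.

```python
import unittest


def apply_discount(price_cents, percent):
    """Hypothetical pure function: no I/O, no shared state,
    and always the same output for the same input."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - price_cents * percent // 100


class ApplyDiscountTests(unittest.TestCase):
    # No mocks and no setup: each test is just input -> expected output.
    def test_applies_percentage(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_percent_keeps_price(self):
        self.assertEqual(apply_discount(999, 0), 999)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```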

4. Ask Yourself: When Should It Fail?

When writing tests, I always ask myself this question:

When should it fail?

Keeping this in mind helps me write robust and reliable tests. A test is valuable when it fails. If a test passes, there is no guarantee that the code doesn’t contain bugs.

When a test fails, you have to be sure that the failure is caused by a bug in the code, not by a poorly written test.
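One way to apply this question is to imagine a plausible bug and check that your test would catch it. In this minimal Python sketch, is_adult and its 18-year threshold are hypothetical, and is_adult_buggy contains a deliberate off-by-one error. Both tests are written as functions that return True when they pass, so they can be run against either version.

```python
def is_adult(age):
    """Hypothetical rule: 18 and over counts as adult."""
    return age >= 18


def is_adult_buggy(age):
    # Same function with a deliberately introduced off-by-one bug.
    return age > 18


def weak_test(fn):
    """Only checks values far from the boundary."""
    return fn(30) is True and fn(5) is False


def boundary_test(fn):
    """Written by asking "when should it fail?": on wrong boundary handling."""
    return fn(18) is True and fn(17) is False
```

weak_test passes for both versions, so it would never fail for this bug. boundary_test passes for is_adult and fails for is_adult_buggy: it fails exactly when it should.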
